I just spent about a week trying to sort out why people kept claiming that surfaces with a given radiance (or luminance) emit pi times as much light over a hemisphere, when there are in fact 2*pi steradians in a hemisphere. Here's what I learned. Special thanks to my friend Mike for helping me figure it out!

Quick overview of light units:

Radiance / Luminance (and their neighbors) are analogous to each other. The radiance units weight photons based on their energy, while the luminance units weight photons based on human visual response.

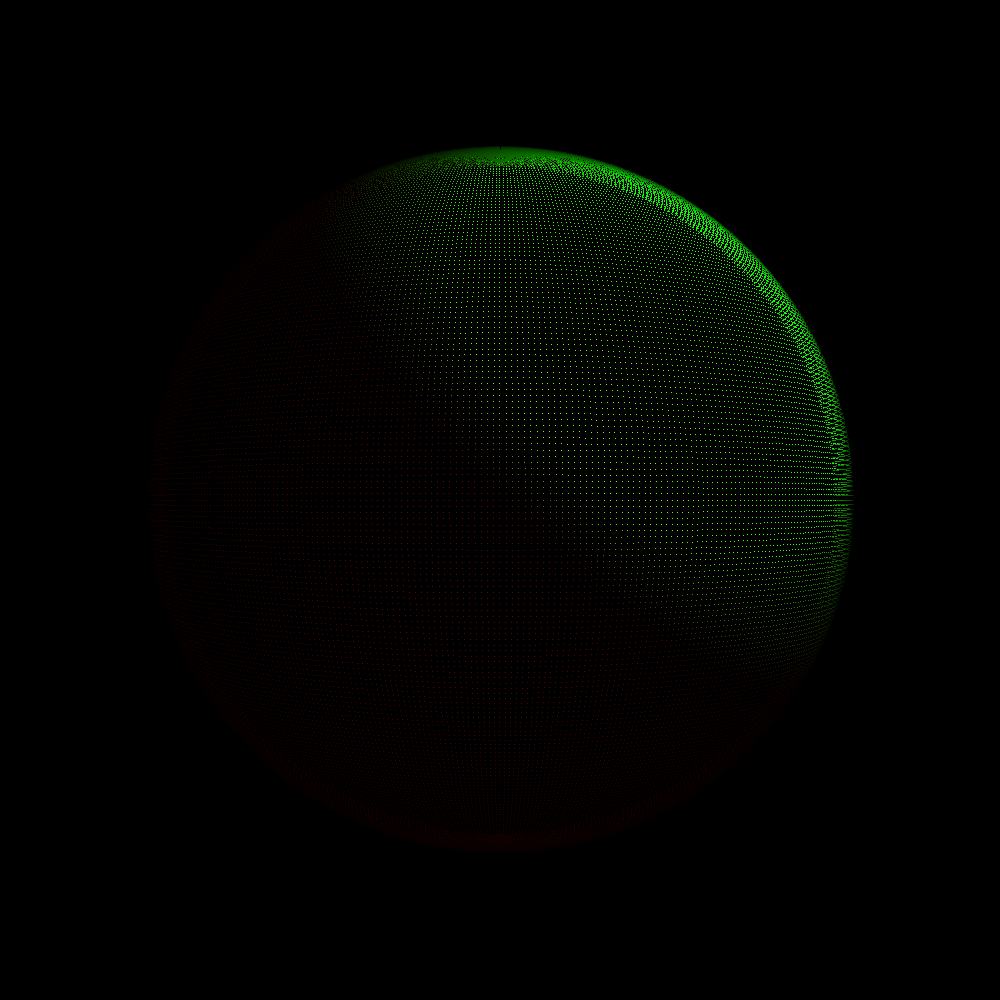

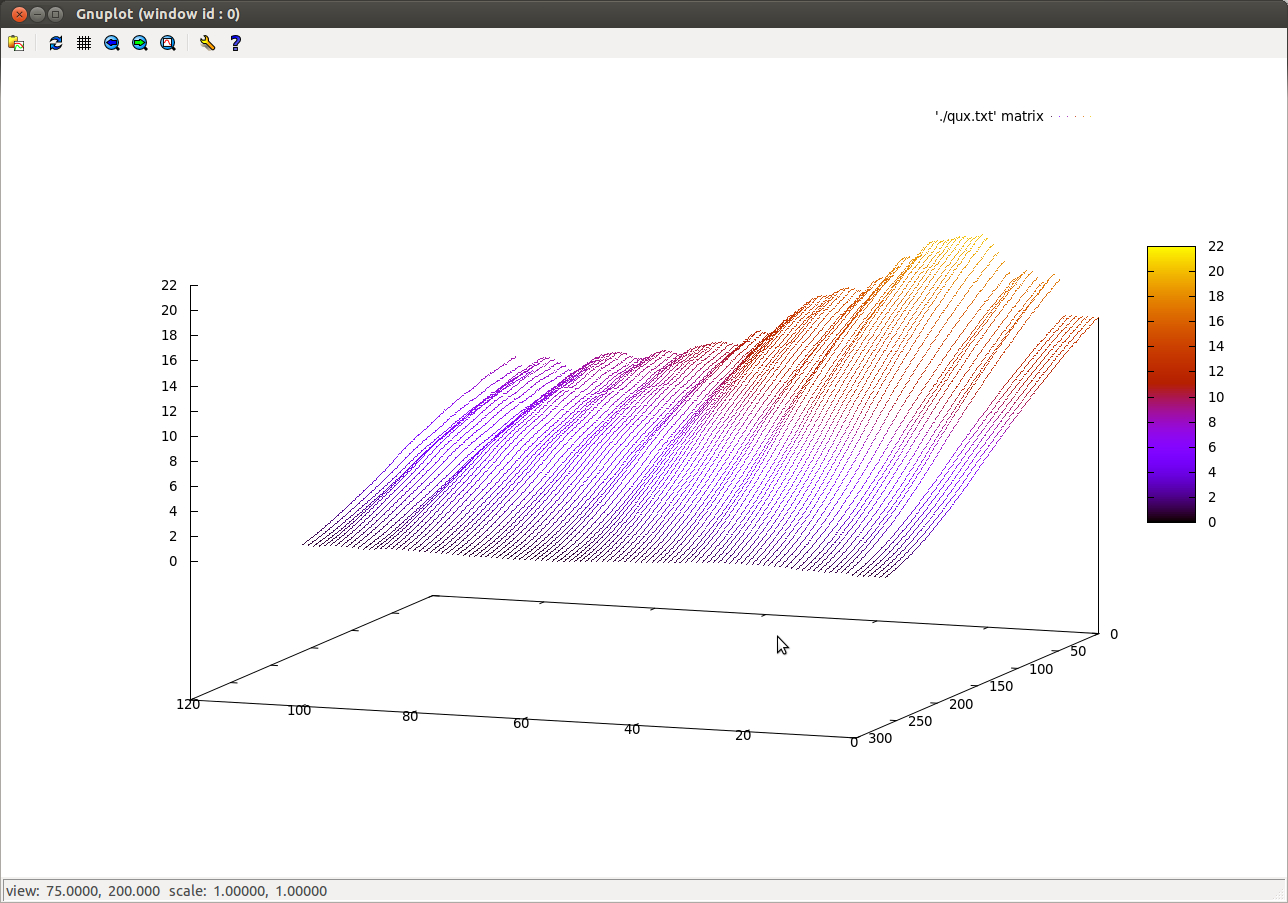

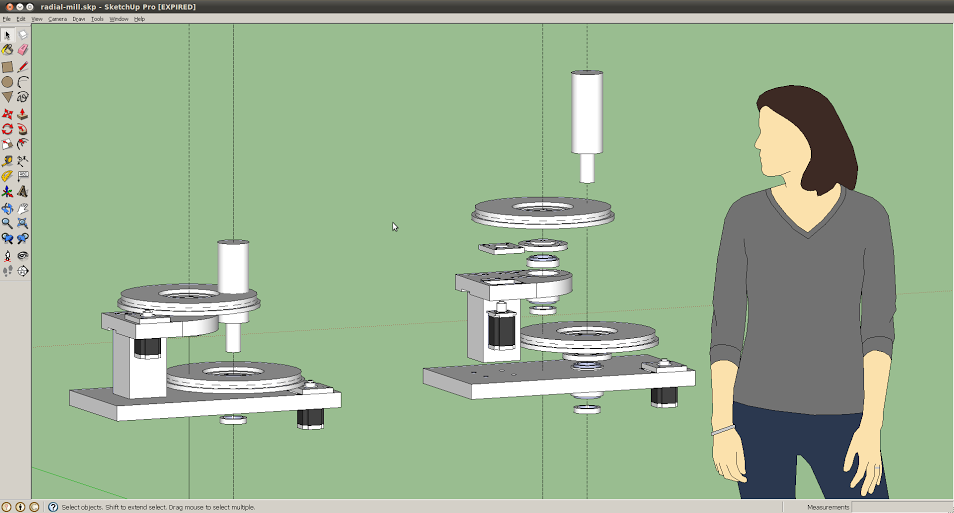

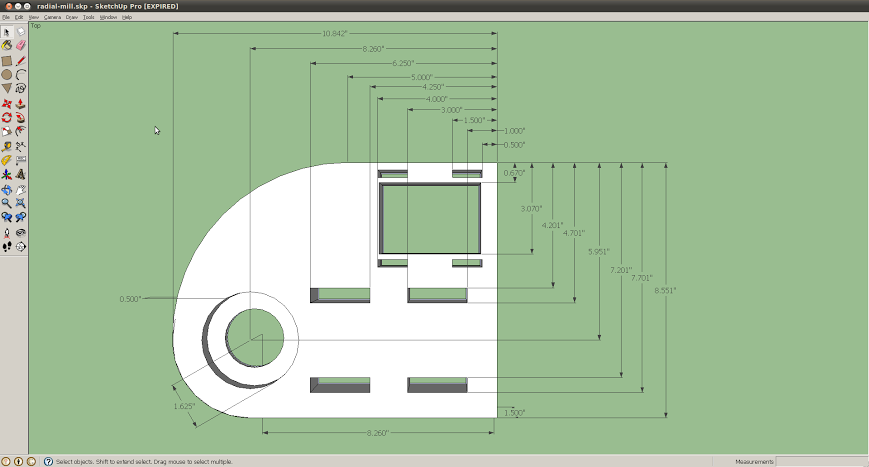

Radiant flux (Watt) / luminous flux (Lumen): "I have a 1W LED"

Radiant intensity (Watt per steradian) / luminous intensity (Candela): "I have a 1W LED focused in a 33 degree cone"

Irradiance (Watt per square meter) / illuminance (Lux): "I put my 1W LED in a soft box whose front surface is 1m by 1m"

Radiance (Watt per square meter per steradian) / Luminance (Nit, or cd / m^2): "I measure 1uW of light being emitted from a 1 mm square patch on my soft box into a 33 degree cone perpendicular to its surface" BUT with a major catch (read on)
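To put rough numbers on those four examples, here is a minimal sketch. It assumes "33 degree cone" means the full apex angle, that the LED's output is uniform inside the cone, and that the soft box loses no light. None of that is stated above, so treat the results as ballpark figures.

```python
import math

def cone_solid_angle(full_angle_deg):
    """Solid angle (sr) of a circular cone with the given full apex angle."""
    half = math.radians(full_angle_deg) / 2.0
    return 2.0 * math.pi * (1.0 - math.cos(half))

flux_w = 1.0                        # radiant flux: "I have a 1W LED"
omega = cone_solid_angle(33.0)      # assumption: 33 degrees is the full cone angle
intensity = flux_w / omega          # W/sr, assuming uniform output inside the cone
irradiance = flux_w / (1.0 * 1.0)   # W/m^2 over a 1m x 1m front, ignoring losses

# Radiance: 1 uW leaving a 1 mm^2 patch into the same cone.
# The cone is narrow and on-axis, so cos(theta) is treated as 1 here.
patch_area_m2 = 1e-3 * 1e-3
radiance = 1e-6 / patch_area_m2 / omega   # W/m^2/sr

print(f"cone solid angle:  {omega:.4f} sr")
print(f"radiant intensity: {intensity:.3f} W/sr")
print(f"irradiance:        {irradiance:.3f} W/m^2")
print(f"radiance:          {radiance:.3f} W/m^2/sr")
```

The same arithmetic works for the photometric units: swap watts for lumens and you get candela, lux and nits.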

Leading up to The Major Catch:

Incident light meters (the ones with a white dome) collect light from a broad area and report it as lux. I think of it as "if I had an ideal 1 square meter solar cell, this is how many watts it'd put out (or how many photons it'd receive per second) given the current ambient lighting".

Reflected light meters (when you look through the 1 degree eyepiece) tell you how much light is coming off of a surface. If you're shooting something with a dark color, you'd rather know how much light is coming off it than how much it's receiving, since you probably don't know its BRDF. Reflected light meters report in nits, or candela per square meter, or to expand fully: lumens per steradian per square meter.

This Sekonic L-758Cine is the one I have. (Its lower priced siblings, unfortunately, won't report in lux or cd/m^2, but only in terms of camera settings)

The Major Catch:

So intuitively, we should be able to use an incident light meter to measure the ambient light coming off, say, a wall, and get, say, 2*pi lux. We could (wrongly) assume it's emitting equally in all directions, and since we know that there are 2 pi steradians in a hemisphere, say that the wall has luminance of 1 lumen per square meter per steradian.

That actually seems completely reasonable, so I'm not sure why I felt the need to check that experimentally, but I'm glad I did. I turned on a big soft box and measured a tiny patch of it using the reflected light meter. Then I switched to incident mode and put the dome right up next to the center of the box, expecting the lux to be 2*pi as large as the nits.

But that's not what I got. In fact it was closer to a ratio of 2, but if I squinted just right I could get a ratio of pi. But definitely not 2 pi. I repeated the experiment on a nearby wall and got even closer to a ratio of pi. WTF?

Searching online I came across quite a few instances of people saying that the ratio of illuminance to luminance is indeed pi for a flat Lambertian surface. Now that really baked my noodle. How could a surface emit a certain number of photons per second per steradian into a 2 pi steradian hemisphere and come up with anything other than 1/(2pi) per steradian? And what's the deal with Lambertian surfaces?

Lambertian surfaces are surfaces that have the same brightness no matter what your angle to their normal is. That means they're isotropic emitters, right? Nope! Look at a business card square on, then angle it away from you. Your eye perceives it as having the same brightness in both cases, but in the second case, the card subtends a smaller angle in your vision even though you're still seeing the entire card. If each photon were to leave the card in a uniformly random direction, you'd receive just as many photons from the card when you viewed it obliquely as you did when you viewed it straight on. Since it's foreshortened when you view it from an angle, you'd get the same number of photons in a smaller solid angle, and the card would appear brighter and brighter (more photons per steradian) the more you angled the card.

So for the card to have the same brightness at any angle and thus qualify as a Lambertian surface, the card must emit less light the further away from perpendicular you get, to compensate for the fact that the card's solid angle is shrinking in your view. That reduction in emitted light turns out to be a factor of cos(theta), where theta is the angle between your line of sight and the surface normal.
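The business card argument can be checked with a few lines of arithmetic. This is a sketch with made-up numbers (a 1 m^2 emitter sending 1 W/sr straight up); the function names are just for illustration. "Apparent brightness" here means the light sent toward the viewer divided by the foreshortened area the viewer sees.

```python
import math

def apparent_brightness(intensity_w_per_sr, theta_deg, area_m2=1.0):
    """Light sent toward the viewer divided by the foreshortened area they see."""
    projected_area = area_m2 * math.cos(math.radians(theta_deg))
    return intensity_w_per_sr / projected_area

def isotropic_intensity(theta_deg, i0=1.0):
    """Same W/sr in every direction (each photon leaves in a random direction)."""
    return i0

def lambertian_intensity(theta_deg, i0=1.0):
    """W/sr falls off as cos(theta) away from the surface normal."""
    return i0 * math.cos(math.radians(theta_deg))

for theta in (0, 30, 60, 80):
    iso = apparent_brightness(isotropic_intensity(theta), theta)
    lam = apparent_brightness(lambertian_intensity(theta), theta)
    print(f"{theta:2d} deg   isotropic: {iso:5.2f}   lambertian: {lam:5.2f}")
```

The isotropic emitter gets brighter and brighter as you tilt it, while the cosine falloff keeps the Lambertian one constant.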

Page 28 of the Light Measurement Handbook has some good diagrams and explanation on this topic: http://irtel.uni-

It turns out that lots of things in nature are more or less Lambertian surfaces, which is pretty convenient for us -- it means things look about the same as we walk past them. And it means we have an accurate sense of how bright something is regardless of what angle we're seeing it from. It's interesting to imagine a world where most surfaces were very non-Lambertian and wonder how that might screw up us carbon-based lifeforms. But I digress.

Imagine a 1m^2 patch of glowing Lambertian ground covered by a huge hemispherical dome. The patch of dome directly above our glowing ground will receive the most light, and the perimeter (horizon) of the dome will get none, since it's looking at the ground on-edge. Let's say the patch has a radiance of 1W per square meter per steradian. Straight up, that works out to 1W per steradian leaving the patch, falling off as cos(theta) to zero at the horizon. (Note that we could aim our reflected light meter at the patch from anywhere -- regardless of angle or distance -- and read the same value, thanks to the fact that the surface is Lambertian. The reduced light as we go toward the horizon will be perfectly balanced out by the foreshortened view of the patch, so the meter will report a constant value of lumens per steradian per square meter.)

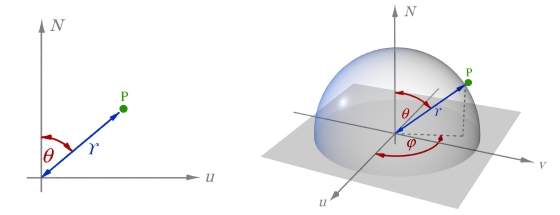

Let's integrate over the dome to find the total emitted power. We'll slice the dome with lines of latitude, so that the bottom slice is a ring touching the ground, with a slightly smaller diameter ring on top of it, going up toward the north pole as the rings get smaller and smaller. Kind of like slicing up a loaf of bread and then putting the end of it heel-up on the table.

The point in the middle of our glowing patch of ground is the center of the hemisphere. Let theta be the angle, measured at that center, between the north pole and a given ring. If we scale the dome to radius 1 (so that area on the dome is the same thing as solid angle in steradians), the radius of that ring is sin(theta), and its circumference is 2*pi*sin(theta).

The width of the ring is d(theta), so the ring covers 2*pi*sin(theta)*d(theta) steradians. The light the patch sends toward the ring is cos(theta) watts per steradian: the constant radiance times the foreshortened area of the patch. Multiply them together and we get the power radiated through the ring. Add it up for all the rings and we capture all the power being emitted by the glowing patch:
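Spelled out from the ring description above, the sum over rings is the integral below. It assumes the dome is scaled to radius 1, so that a ring's area equals its solid angle, and that the 1 m^2 patch sends cos(theta) watts per steradian toward the ring at angle theta.

```latex
P = \int_{0}^{\pi/2} \underbrace{\cos\theta}_{\text{W/sr toward the ring}}
      \cdot \underbrace{2\pi \sin\theta \, d\theta}_{\text{solid angle of the ring}}
  = \pi \int_{0}^{\pi/2} \sin 2\theta \, d\theta
  = \pi \left[ -\tfrac{1}{2}\cos 2\theta \right]_{0}^{\pi/2}
  = \pi \ \text{watts}
```

Without the cos(theta) factor, the same integral just adds up the solid angle of the rings and gives 2*pi.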

So our Lambertian surface with radiance 1W / m^2 / sr emits a total of pi watts over a hemisphere, even though a hemisphere consists of 2 pi steradians, and even though we can measure that 1W / m^2 / sr radiance from any angle! The seeming paradox goes away once you notice that the constant radiance comes from less light spread over a smaller apparent area. The north pole sees the entire patch and thus gets the most light, whereas the horizon sees the patch from the edge and sees only a thin sliver of light. Add it all up, and you get half as much light as if the dome were being uniformly lit everywhere.
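If you'd rather not trust the calculus, the ring-by-ring sum is easy to do numerically. This is a sketch using a plain midpoint rule over the rings, with the same assumptions as the dome picture: a 1 m^2 patch at the center, dome scaled to radius 1, and 1 W/sr sent straight up.

```python
import math

def hemisphere_power(intensity, steps=100_000):
    """Sum intensity(theta) times ring solid angle over the whole dome."""
    d_theta = (math.pi / 2) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta          # middle of this ring
        ring_solid_angle = 2 * math.pi * math.sin(theta) * d_theta
        total += intensity(theta) * ring_solid_angle
    return total

lambertian = hemisphere_power(math.cos)          # cos(theta) W/sr
uniform = hemisphere_power(lambda theta: 1.0)    # 1 W/sr in every direction

print(f"Lambertian patch: {lambertian:.6f} W  (pi   = {math.pi:.6f})")
print(f"Uniform emitter:  {uniform:.6f} W  (2 pi = {2 * math.pi:.6f})")
print(f"ratio: {lambertian / uniform:.3f}")
```

The Lambertian total comes out at pi and the uniform one at 2 pi, which is the factor-of-two difference described above.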